Fundamentals of Statistics contains material from various lectures and courses by H. Lohninger on statistics, data analysis and chemometrics.


See also: optimization methods, Simplex Algorithm

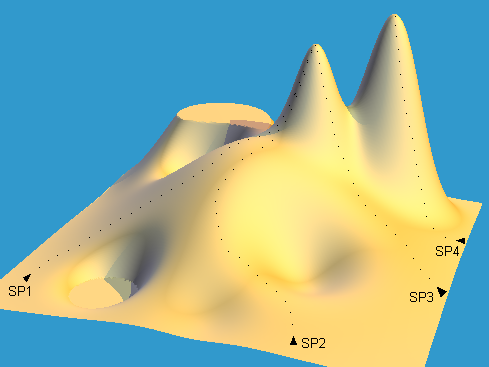

Optimization Methods - Gradient Descent

The idea behind gradient descent, or "hill climbing", methods is to find the maximum or minimum of a response surface by following the gradient, either up or down. One of the big advantages of such methods is that the nearest optimum can be found with comparatively few calculations. However, gradient descent methods have several drawbacks. One of the most important is that they do not necessarily find the global optimum: as the figure shows, whether or not the global optimum is found depends on the starting point.
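The dependence on the starting point can be illustrated with a minimal sketch. The one-dimensional response surface `f`, the step size and the iteration count below are assumptions chosen for illustration only; they do not come from the text. The function has two minima, and plain gradient descent settles in whichever one lies downhill from the start.

```python
def f(x):
    # Hypothetical response surface with one global and one local minimum
    return x**4 - 3 * x**2 + x


def f_prime(x):
    # Analytical derivative (gradient) of f
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x0, step=0.01, iterations=2000):
    """Follow the negative gradient from x0 with a fixed step size."""
    x = x0
    for _ in range(iterations):
        x -= step * f_prime(x)
    return x


left = gradient_descent(-2.0)   # start to the left of both minima
right = gradient_descent(2.0)   # start to the right of both minima
print(left, f(left))
print(right, f(right))
```

Under these assumptions the run started at -2 ends near x ≈ -1.30, the global minimum, while the run started at +2 stops near x ≈ 1.13, a local minimum with a higher function value.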

Another problem with gradient descent methods is that finding the gradient at a particular point of a high-dimensional response surface may require a considerable number of experiments (in fact, one has to test an n-dimensional sphere around the current location in order to find the direction of the next step). In practice, it is recommended to perform a set of independent hill-climbing runs with different starting conditions. Several methods are available that are based on some kind of gradient design. One of the more important of these is simplex optimization.
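Both points, the cost of estimating the gradient and the use of several independent starts, can be sketched as follows. This is only one possible approach under stated assumptions: the gradient is estimated by central differences (two evaluations per dimension, which stands in for probing the neighbourhood of the current location), and the names `numerical_gradient`, `descend`, `multi_start` and the test surface `surface` are hypothetical.

```python
def numerical_gradient(f, x, h=1e-5):
    """Central-difference estimate; needs 2 * len(x) evaluations of f."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


def descend(f, start, step=0.01, iterations=2000):
    """Fixed-step gradient descent using the estimated gradient."""
    x = list(start)
    for _ in range(iterations):
        g = numerical_gradient(f, x)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x


def multi_start(f, starts):
    """Run independent descents and keep the result with the lowest f."""
    results = [descend(f, s) for s in starts]
    return min(results, key=f)


def surface(x):
    # Hypothetical response surface: each coordinate has two minima
    return sum(xi**4 - 3 * xi**2 + xi for xi in x)


starts = [[2.0, 2.0], [-2.0, 2.0], [2.0, -2.0], [-2.0, -2.0]]
best = multi_start(surface, starts)
print(best, surface(best))
```

Each gradient estimate here costs 2n evaluations of the response, so in an experimental setting the number of measurements per step grows with the number of variables. The four starting points are an arbitrary choice; with other starting conditions the best run found may still be only a local optimum.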
