Fundamentals of Statistics contains material of various lectures and courses of H. Lohninger on statistics, data analysis and chemometrics.


See also: Kernel Estimators

RBF Neural Networks

Radial basis function networks (RBF networks) are a special type of neural network that is closely related to density estimation methods. Some researchers do not regard them as neural networks at all. A thorough mathematical description of RBF networks is given by Broomhead.
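The page does not spell out the network function. In the commonly used formulation (assumed here), a network with $m$ Gaussian hidden neurons, centers $\mathbf{c}_j$, widths $\sigma_j$, output weights $w_j$ and bias $w_0$ maps an input $\mathbf{x}$ to

$$
y(\mathbf{x}) = w_0 + \sum_{j=1}^{m} w_j \exp\!\left(-\frac{\lVert \mathbf{x}-\mathbf{c}_j\rVert^2}{2\sigma_j^2}\right)
$$

Placing one center on each of $n$ samples $\mathbf{x}_i$ in $d$ dimensions, with $\sigma_j = h$, $w_0 = 0$ and $w_j = 1/\bigl(n\,(2\pi h^2)^{d/2}\bigr)$, turns this sum into a Gaussian kernel density estimate, which is one way to see the relation to density estimation:

$$
\hat f(\mathbf{x}) = \frac{1}{n\,(2\pi h^2)^{d/2}} \sum_{i=1}^{n} \exp\!\left(-\frac{\lVert \mathbf{x}-\mathbf{x}_i\rVert^2}{2h^2}\right)
$$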

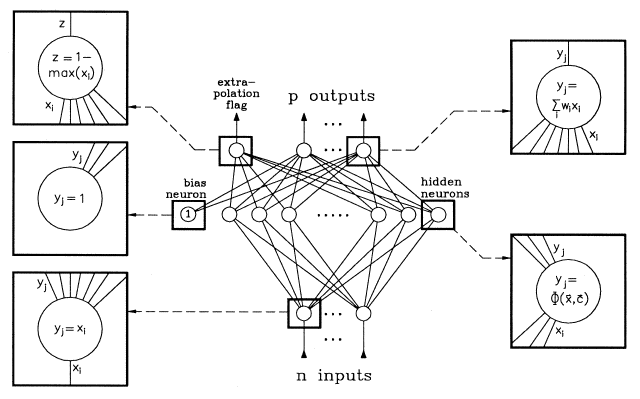

RBF networks have a special architecture: they have only three layers (input, hidden, output), and only one of these layers, the hidden layer, contains neurons with a nonlinear response. Some authors have additionally suggested adding extra neurons that estimate the reliability of the output signals (an extrapolation flag).
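A minimal forward-pass sketch of this three-layer layout follows. The names `gaussian` and `rbf_forward` are hypothetical, and so is the extrapolation criterion: here the flag is raised when no hidden neuron responds above an assumed threshold `flag_threshold`. This is only one plausible reading of the reliability neurons mentioned above, not the method those authors proposed.

```python
import math
from typing import Sequence


def gaussian(x: Sequence[float], center: Sequence[float], width: float) -> float:
    """Hidden-neuron response: Gaussian bell curve of the distance to the center."""
    dist2 = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist2 / (2.0 * width ** 2))


def rbf_forward(x, centers, widths, weights, bias, flag_threshold=0.1):
    """Return (output, extrapolation_flag) for one input vector x.

    Input layer:  x is passed unchanged to every hidden neuron (no computation).
    Hidden layer: the only nonlinear layer, one Gaussian response per neuron.
    Output layer: linear combination of the hidden responses plus a bias.
    """
    hidden = [gaussian(x, c, s) for c, s in zip(centers, widths)]
    output = bias + sum(w * h for w, h in zip(weights, hidden))
    # Hypothetical reliability check: if no hidden neuron responds strongly,
    # x lies far from every center and the output is an extrapolation.
    extrapolating = max(hidden) < flag_threshold
    return output, extrapolating
```

For example, `rbf_forward([0.0, 0.0], [[0.0, 0.0], [1.0, 1.0]], [0.5, 0.5], [1.0, -1.0], 0.0)` evaluates close to the first center and is not flagged, whereas an input such as `[5.0, 5.0]` is far from both centers and sets the flag.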

The input layer, as in many other network models, has no computing power and serves only to distribute the input data to the hidden neurons. The hidden neurons have a nonlinear transfer function based on Gaussian bell curves. The output neurons, in turn, have a linear transfer function, which makes it possible to calculate their optimum weights directly by linear least squares.
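Because the output layer is linear, its weights can be fitted by ordinary least squares once the centers and widths of the hidden neurons are fixed. The sketch below assumes these are already chosen (how to choose them is not covered here), builds the design matrix of hidden responses plus a bias column, and solves the normal equations with plain Gaussian elimination. The function names `gaussian`, `solve` and `fit_output_weights` are hypothetical.

```python
import math


def gaussian(x, center, width):
    """Gaussian response of one hidden neuron to input vector x."""
    dist2 = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist2 / (2.0 * width ** 2))


def solve(a, b):
    """Solve the square system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    # Back substitution on the upper-triangular system.
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][k] * w[k] for k in range(r + 1, n))
        w[r] = (m[r][n] - s) / m[r][r]
    return w


def fit_output_weights(xs, ys, centers, widths):
    """Least-squares output weights [bias, w1, ..., wm] for fixed hidden neurons."""
    # Design matrix: a constant bias column followed by one column per hidden neuron.
    phi = [[1.0] + [gaussian(x, c, s) for c, s in zip(centers, widths)] for x in xs]
    p = len(phi[0])
    # Normal equations: (Phi^T Phi) w = Phi^T y
    ata = [[sum(row[i] * row[j] for row in phi) for j in range(p)] for i in range(p)]
    aty = [sum(row[i] * y for row, y in zip(phi, ys)) for i in range(p)]
    return solve(ata, aty)
```

Solving the normal equations squares the condition number of the design matrix, so for larger or nearly collinear problems a QR- or SVD-based solver such as `numpy.linalg.lstsq` is the safer choice.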
